[ad_1]

On the core of Grammarly is our dedication to constructing secure, reliable AI techniques that assist individuals talk. To do that, we spend a number of time fascinated by tips on how to ship writing help that helps individuals talk in an inclusive and respectful approach. We’re dedicated to sharing what we study, giving again to the pure language processing (NLP) analysis neighborhood, and making NLP techniques higher for everybody.

Lately, our analysis staff printed Gender-Inclusive Grammatical Error Correction By Augmentation (authored by Gunnar Lund, Kostiantyn Omelianchuk, and Igor Samokhin and offered within the BEA workshop at ACL 2023). On this weblog, we’ll stroll by way of our findings and share a novel approach for creating an artificial dataset that features many examples of the singular, gender-neutral they.

Why gender-inclusive grammatical error correction issues

Grammatical error correction (GEC) techniques have an actual influence on customers’ lives. By providing ideas to right spelling, punctuation, and grammar errors, these techniques affect the alternatives customers make after they talk. In our analysis, we investigated how hurt might be perpetuated inside AI techniques, and approaches to assist alleviate these in-system dangers.

First, hurt can happen if a biased system performs higher on texts containing phrases of 1 gendered class over one other. For example, think about a system that’s higher at discovering grammar errors for textual content containing masculine pronouns than for female ones. A person who writes a letter of advice utilizing female pronouns might need fewer corrections from the system and find yourself with extra errors, leading to a letter that’s much less efficient simply because it incorporates she as an alternative of he.

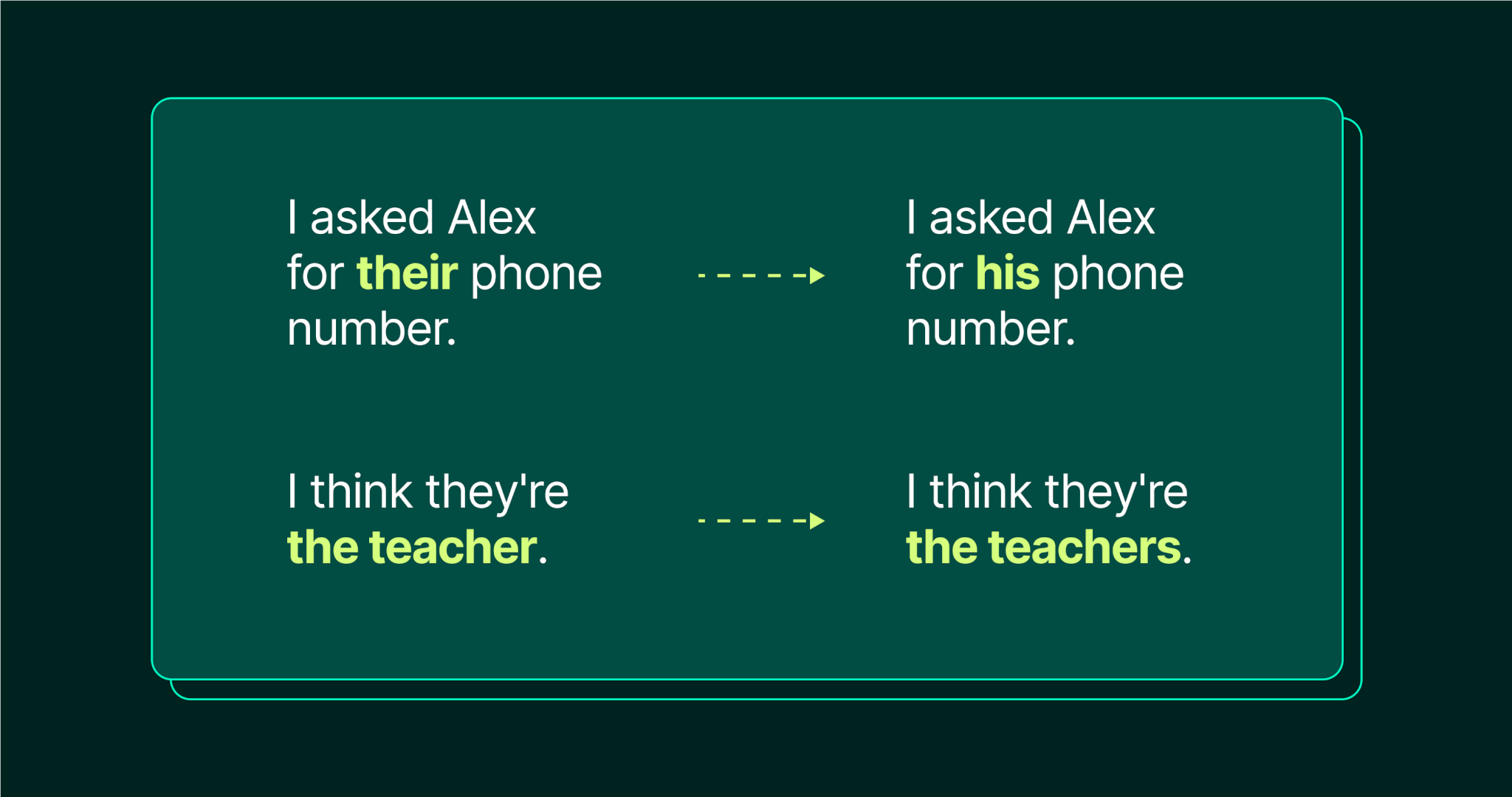

The second sort of hurt we investigated occurs if a system gives corrections that codify dangerous assumptions about explicit gendered classes. This could embody the reinforcement of stereotypes and the misgendering or erasure of people referred to within the person’s textual content.

Examples of dangerous corrections from a GEC system. The primary is an occasion of misgendering, changing the singular they with a masculine pronoun. The second is an occasion of erasure, implying that they will solely be used accurately as a plural pronoun.

Mitigating bias with information augmentation

We hypothesized that GEC techniques can exhibit bias resulting from gaps of their coaching information, corresponding to a scarcity of sentences containing the singular they. Knowledge augmentation, which creates extra coaching information based mostly on the unique dataset, is one approach to repair this.

Counterfactual Knowledge Augmentation (CDA) is a way coined by Lu et al. of their 2019 paper Gender Bias in Neural Pure Language Processing. It really works by going by way of the unique dataset and changing masculine pronouns with female ones (him → her) and vice versa. CDA additionally swaps gendered frequent nouns (corresponding to actor → actress and vice versa) and has been prolonged to swap female and masculine correct names as nicely.

To our data, CDA had by no means earlier than been utilized to the GEC activity specifically, solely to NLP techniques extra usually. To check feminine-masculine CDA approaches for GEC, we produced round 254,000 gender-swapped sentences for mannequin fine-tuning. We additionally created a lot of singular-they sentences utilizing a novel approach.

Creating singular they information

Our paper is the primary to discover utilizing CDA methods to create singular-they examples for NLP coaching information. By changing pronouns like he and she within the authentic coaching information with they, we created 63,000 extra sentences with singular they.

To create sentences that comprise the singular they, we first needed to determine how the singular they differs from the plural they, since our information incorporates a number of sentences with the plural they already. Particularly, singular they is distinguished from plural they in that singular they refers to a single individual, whereas plural they refers to a number of individuals. The central downside that we resolve, then, is making certain that we find yourself with a dataset containing cases of the singular they whereas preserving unchanged any present makes use of of the plural they.

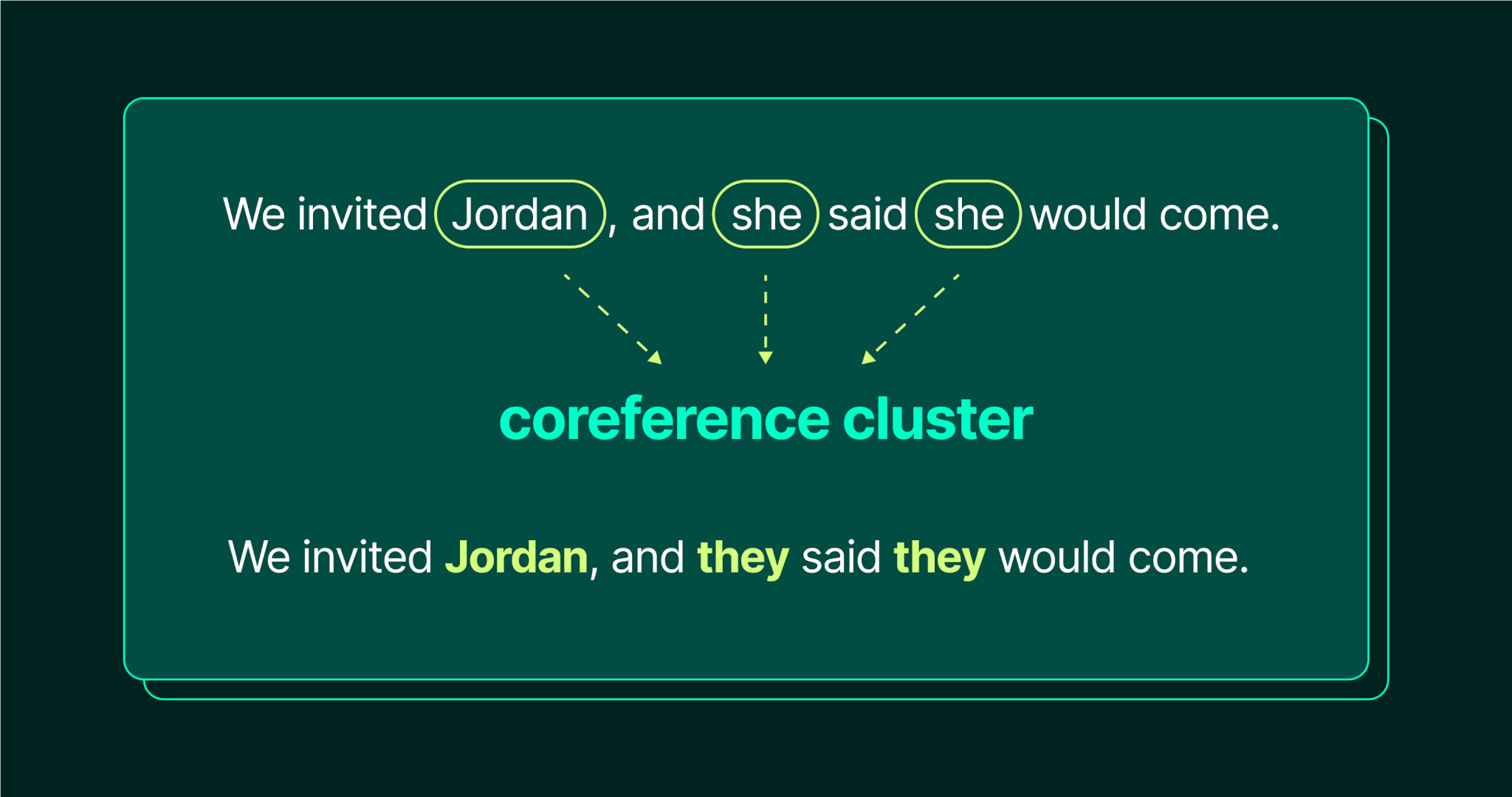

To realize this, we first wanted a approach to determine coreference clusters within the supply textual content. In linguistics, coreference clusters are composed of expressions that seek advice from the identical factor. For instance, within the sentence “We invited Jordan, and she or he stated she would come,” “Jordan” and the 2 extra “she”s can be a part of the identical coreference cluster. We used HuggingFace’s NeuralCoref coreference decision system for spaCy to determine clusters. From there, we recognized whether or not the antecedent (the unique idea being referenced, corresponding to “Jordan”) was singular. In that case, we changed the associated pronouns with “they.”

As well as, they has totally different verbal settlement paradigms than he or she: as an example, “she walks” versus “they stroll.” We used spaCy’s dependency parser and the pyinflect bundle to make sure our swapped sentences had the suitable subject-verb settlement.

Outcomes

We evaluated a number of state-of-the-art GEC fashions utilizing qualitative and quantitative measures, each with and with out our CDA augmentation for feminine-masculine pronouns and singular they. Our analysis dataset was created utilizing the identical information augmentation methods mentioned above and manually reviewed for high quality by the authors.

We investigated the techniques for gender bias by testing their efficiency on our analysis dataset with none fine-tuning.

- For feminine-masculine pronouns, we didn’t discover proof of bias. Nonetheless, different sorts of gender bias exist in NLP techniques—learn our weblog publish on mitigating bias in autocorrect for an instance.

- In distinction, for singular they, we discovered that bias exists and it’s important. For the GEC techniques we examined, there was between a 6 and 9 p.c discount in F-score (a metric combining precision and recall) on an analysis dataset augmented with a lot of singular-they sentences, in comparison with one with out.

After fine-tuning the GECToR mannequin with our augmented coaching dataset, we noticed a considerable enchancment in its efficiency on singular-they sentences, with the F-score hole shrinking from ~5.9% to ~1.4%. Please evaluation our paper for a full description of the experiment and the outcomes.

Conclusion

Knowledge augmentation may help make NLP techniques much less biased, but it surely’s not at all an entire answer by itself. For our Grammarly product choices, we implement many methods past the scope of this analysis—together with hard-coded guidelines—to guard customers from dangerous outcomes like misgendering.

We imagine it’s essential for researchers to have benchmarks for measuring bias. For that purpose, we’re publicly releasing an open-source script implementing our singular-they augmentation approach.

Grammarly’s NLP analysis staff is hiring! Take a look at our accessible roles right here.

[ad_2]